Your guide to applying advertising effectiveness benchmarks

The need for advertising campaign benchmarks

Few decisions demand staying ahead of the curve more than deciding when and how to use advertising effectiveness benchmarks (AKA norms) to evaluate advertising performance. Organizations need answers to the questions below - and many others - to be convinced that their marketing team, collateral, and strategy are truly successful.

- Are these new advertising campaigns better than what we’ve run before?

- How do our advertisements stack up to our competitors?

- How much incremental business growth can we attribute to these ads?

- Should we continue growing our investment in this marketing channel?

To answer these types of questions, normative benchmarks are absolutely essential. Benchmarks, by nature, are an amalgamation of data from historical ad campaigns that [presumably] tell researchers what ‘good’ and ‘poor’ performance look like. Whether these historical ads are from one’s own brand, immediate competitors, or the larger industry, their data enables answering a fairly straightforward question: are my specific advertisements outperforming a validated baseline? Unfortunately, if your norms are based on ancient data, outdated platforms, or invalidated assumptions, their value is effectively null - if not negative - for the organization.

The need for a playbook to apply benchmarks effectively

It follows that marketers need a strong playbook when thinking about the analysis and application of normative benchmarks.

- In the absence of a playbook, different leaders may fundamentally disagree on the proper approach to take for ad performance assessments.

- In the presence of a suboptimal playbook, leaders may incorrectly evaluate advertising campaign effectiveness in ways that drive down long-term business success.

- In the presence of a validated playbook, leaders can socialize and engage a consistent assessment approach that enables strong organizational decision-making.

In this article, we outline a validated playbook for marketers, researchers, and their partners to use when leveraging norms for advertising effectiveness. By following the tenets of this playbook, your marketing team will be in a much stronger position to defend and communicate their ROI to stakeholders. And by establishing a strong ROI within the organization, marketers can effectively petition for the incremental budget, headcount, and creative resources that will drive their department to new heights.

How can ad effectiveness benchmarks be effectively leveraged?

Once critical questions about the quality, relevance, and applicability of benchmark data are effectively answered (see our larger report here), marketers face the seemingly endless possibilities of how to apply normative benchmarks to their campaigns. With an ocean of historical marketing campaigns to leverage, and a multitude of analyses to consider, it’s important to prioritize benchmarking efforts that target critical organizational questions.

- How are we doing against ourselves? For some organizations, performance is heavily focused on internal evolution. Stakeholders here are most interested in seeing incremental success by showcasing meaningful gains against previous internal efforts.

- How are we doing against others? For other organizations, performance is all about the competition. Stakeholders want to see performance that exceeds that of their closest rivals, and success is only declared when the company’s campaigns are better than the rest.

- How can we maintain and improve our performance? For still others, performance is about consistency. With strong historical campaigns under their belt and solid market share, stakeholders want more of the same. Incremental gains would be great, but as long as campaigns are not falling behind, steady-state is ideal.

So, at the risk of stating the obvious, your first step is to consider what type of organization you are - or what you aspire to be. If you’re a market leader with a legacy brand to consider, analyses tied to internal performance over time may be prioritized. If you’re an emerging brand with a story to tell, competitive performance on key awareness and brand recognition metrics is likely in order. And if you’re fighting for limited organizational dollars, you might try to prioritize - well - everything!

But what are some ideal ways to leverage a high-quality normative dataset? And more importantly, how can these approaches help you answer the critical organizational questions above? In our opinion, marketers should home in on five primary applications:

A. Look at yourself in the mirror: how am I doing against myself?

B. Consider overall benchmarks: can industry-wide averages make a point?

C. Compare to your category and industry: what’s specific, but not too specific?

D. Prioritize your metrics: can I isolate a few core KPIs for my business?

E. De-aggregate to deconstruct your goals: how can distributions add value?

Below, we unpack each of these use cases, and explain how each powers a unique and insightful view of campaign performance.

A: Look at yourself in the mirror

While the vast majority of our playbook is focused on leveraging norms from the broader industry (see sections B-E), the first place to look for a clear view of performance is directly at oneself. Within-brand comparisons are distinctly powerful, and enable contextualized views of performance that no normative database can provide. That said, in order to maximize the impact of this ‘self-assessment’, it’s critical that your ad effectiveness measurement comes from a highly consistent source and methodology.

Single-source data is the optimal method for ensuring accurate comparisons across campaigns. If you’re testing brand lift for some campaigns with Partner A, and others with Partner B, comparing the results across partners is rife with confounding variables. However, if you’ve been able to consolidate your testing to a singular partner, and you have a solid number of campaign studies under your belt, you can start peering into your own brand’s benchmarks to see what ‘good’ looks like.

Let’s say you’ve run 10 ad effectiveness projects with a singular research provider in the past year. Assuming these campaigns have distinct similarities (channel reach, spend, target audience, etc.), it’s entirely fair to build a pseudo-benchmark for those projects so that you can start comparing to what's ‘typical’ or ‘average’. To control for outliers - really great or really poor campaigns - you should take the median lift score you’ve observed across campaigns for a given metric, and treat that as your running benchmark. As you conduct new campaigns or evaluate historical ones, you can now form meaningful conclusions about success and failure.
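As a rough illustration of the self-benchmark described above, here is a minimal Python sketch. The lift scores, the new campaign's result, and the function names are all hypothetical and chosen only for illustration; the one idea taken from the text is using the median across past campaigns as a running benchmark.

```python
from statistics import median

# Hypothetical purchase-intent lift scores (in points) from ten past
# campaigns, all measured by the same research provider.
past_lifts = [1.5, 2.0, 2.2, 2.8, 3.0, 3.1, 3.4, 4.0, 4.5, 9.0]


def self_benchmark(lifts):
    """Return the median lift, which is less sensitive to outlier campaigns than the mean."""
    return median(lifts)


def compare_to_benchmark(new_lift, lifts):
    """Return how far a new campaign sits above (+) or below (-) the running benchmark."""
    return new_lift - self_benchmark(lifts)


benchmark = self_benchmark(past_lifts)
delta = compare_to_benchmark(3.8, past_lifts)  # 3.8 is a hypothetical new result

print(f"Running benchmark: {benchmark:.2f} points")
print(f"New campaign vs. benchmark: {delta:+.2f} points")
```

Note that the single 9.0-point campaign barely moves the median, whereas it would pull a simple average noticeably upward. That is the outlier control the median is meant to provide.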

B: Consider overall benchmarks

Once they’ve had a good look at their own data, most marketers would prefer to jump right into a very specific set of categories or domains for benchmarking purposes. You want to know how you’re doing against your most successful competitors, and that’s a pretty natural inclination. That said, before jumping head-first into those more specific areas of analysis, it’s critical to take a long look at the overall benchmarks available to you at the highest levels of aggregation.

Why are overall benchmarks valuable? We list out a few factors below, some of which make them potentially more valuable than any other type of benchmarking data:

Scale: Because they utilize data from any campaign in the overall normative dataset, overall benchmarks have tremendous scale relative to more specific categories or industries. This sheer size improves the reliability of your benchmark information, giving marketers increased confidence in the exact norm for any given metric. There’s huge power in numbers, and using overall benchmarks ensures you’re leveraging as many numbers as possible when determining campaign success and failure.

Competitive framing: From a storytelling standpoint, there’s something extremely powerful about holding your brand or your company against every other company in the marketing universe. Instead of constraining one’s ambitions to an ‘excellent CPG advertiser’ or an ‘above-average airline ad’, comparing oneself to campaigns across all industries sets a higher bar of achievement. Consumers don’t walk around thinking ‘this ad is pretty good for a services organization’ - they’re thinking about whether this ad breaks through the everyday media clutter. Using overall benchmarks helps marketers determine if their brand is indeed breaking through this digital clutter and driving meaningful consumer impact.

Stability: Similar to the stock market, if you’re tracking one company / industry benchmark in isolation, you’re going to see more short-term fluctuations. Benchmarks that track the entire marketing universe are much more likely to be stable, and are much less open to changes that occur across specific industry sectors. Organizational leaders are often confused if / when benchmarks move significantly across quarters or years, as their ads go from ‘good’ to ‘bad’ when nothing notable changes in the collateral or larger marketplace. Leveraging overall benchmarks decreases the risk of such fluctuations, and ensures solid returns on your benchmarking investments.

C: Compare to your category and industry

At the end of the day, no marketer is going to be fully satisfied with ‘overarching’ norms. They want to know how they’re stacking up against the biggest players in their category or industry. As such, category-level and industry-level benchmarks are an important part of a marketer’s playbook, as they can help drive deeper insights and more compelling stories for their stakeholders.

Let’s consider an example of a major CPG brand that has just launched a new product. Internally, they know the product is a huge hit waiting to happen, and they’re equally bullish on the state of their ad campaign. As such, they partner with a preferred research provider, and assess how the new advertisements lift various brand metrics. After the campaign concludes, they are able to see a 3-point lift in purchase intent when comparing exposed and matched-control consumers. Great result, right? Well, it depends on what they decide to compare these results against:

They compare against the overall benchmark

They’ll be pleased to see that the overall benchmark for purchase intent across every campaign tested in this manner is +2 points. So, their 3-point lift outpaces the overall benchmark by 50%. As such, their decision to leverage an overall benchmark led to a positive, encouraging result that would reinforce the idea of continuing heavy investment in the ad spots.

They compare against a more specific category benchmark

They’ll be disappointed to see that the benchmark for the ‘consumables’ category is +4 points of purchase intent. In other words, they’re underperforming their category by 25%. When they share this information with stakeholders, the mutual decision is made to keep the campaign up [as it is working overall], but to swap out the existing creative in favor of other, more successful, ads that better showcase the brand's differentiation in the category.
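The arithmetic behind the two comparisons can be sketched in a few lines of Python. The function name `relative_gap` is our own illustrative choice; the lift and benchmark values are the ones from the example (3 points observed, +2 overall, +4 for the category).

```python
def relative_gap(campaign_lift, benchmark_lift):
    """Percentage by which a campaign's lift is above (+) or below (-) a benchmark."""
    if benchmark_lift == 0:
        raise ValueError("benchmark lift must be non-zero")
    return (campaign_lift - benchmark_lift) / benchmark_lift * 100


campaign = 3.0            # observed purchase-intent lift, in points
overall_benchmark = 2.0   # overall norm from the example
category_benchmark = 4.0  # 'consumables' category norm from the example

print(f"vs. overall:  {relative_gap(campaign, overall_benchmark):+.0f}%")
print(f"vs. category: {relative_gap(campaign, category_benchmark):+.0f}%")
```

The same 3-point lift reads as +50% against the overall norm and -25% against the category norm, which is why the choice of comparison set should be stated alongside any headline figure.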

The point of the above example is this: category benchmarks are not always consistent with larger - and potentially more reliable - overall norms. Effectively understanding, communicating, and tracking these discrepancies is a core part of good benchmark applications. Some common differences by category and segment include:

- Specific metrics are more / less challenging to lift due to category nuances. E.g., moving aided awareness for large CPG companies is very tough.

- Competitive players have wildly different ad spend - and therefore performance. E.g., comparing oneself to categories with large-scale Silicon Valley tech companies.

- Buy / sell cycles vary heavily across industries - impacting different metrics. E.g., DTC brands differ from others in their reliance on owned vs. third-party e-commerce.

D: Prioritize your metrics

If you're lucky enough to be working with a research provider who has multiple ad-effectiveness metrics, a humbling challenge is merely focusing your attention on a smaller subset of benchmarks. While every normative metric can have incremental value to your organization, sharing 5, 10, or even 15 different benchmarks with stakeholders is not likely to go well. Leaders need distinct, actionable metrics that they can link to bottom-line performance, so highlighting specific metrics will positively impact analysis reception.

But how should someone go about picking-and-choosing the right metrics? Again, this primarily depends on the nuances of your specific stakeholders, company goals, and industry you occupy. Large brands may not need to focus on awareness, as their top-of-funnel performance is not at issue (nor is it a focus of the advertising itself). Instead, they may see opportunity for growth on lower-funnel metrics like purchase intent and site visitation. In contrast, emerging brands may get tremendous value out of both tracking and benchmarking their awareness lift, as down-funnel metrics may be artificially constrained by current awareness numbers.

At the end of the day, the key advertising metrics you focus on should be the ones (1) your campaign is trying to move and (2) your leaders care about most. Align with your organization and set clear goals towards the movement of these metrics, and focus reporting on difference scores between your campaigns and these metrics’ benchmarks. With heightened attention and recurring examinations, the entire organization will be well-socialized on the metrics that matter, and positive performance will have a high likelihood of being noticed.
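One way to keep reporting focused is to compute difference scores only for the metrics you have prioritized. The sketch below assumes hypothetical lift values, benchmark values, and metric names; none of these figures come from a real dataset.

```python
# Hypothetical campaign lifts and benchmarks, in points, keyed by metric.
campaign_lifts = {
    "awareness": 1.0,
    "favorability": 3.5,
    "purchase_intent": 3.0,
    "site_visitation": 0.5,
}
benchmarks = {
    "awareness": 2.5,
    "favorability": 3.0,
    "purchase_intent": 2.0,
    "site_visitation": 1.0,
}

# The short list agreed with stakeholders (an assumption for this example).
priority_metrics = ["purchase_intent", "site_visitation"]


def difference_scores(lifts, norms, metrics):
    """Return campaign lift minus benchmark for each prioritized metric."""
    return {metric: lifts[metric] - norms[metric] for metric in metrics}


for metric, diff in difference_scores(campaign_lifts, benchmarks, priority_metrics).items():
    status = "above" if diff > 0 else "at or below"
    print(f"{metric}: {diff:+.1f} points ({status} benchmark)")
```

Restricting the report to the agreed list keeps the other metrics available for analysts without putting them in front of leadership.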

E: De-aggregate to deconstruct your goals

Normative benchmarks derive the majority of their power from aggregation: by assessing the median value across a wide array of distinct campaigns, advertisers get an increasingly reliable picture of what “typical” or “good” tends to look like. However, this type of analysis is only one way to derive meaningful insights from a large, diverse dataset. On the opposite side of the analysis spectrum lies de-aggregation: examining the distribution of brand and outcome lift scores received by individual campaigns.

Examining the distribution (rather than the median) is valuable for a few key reasons:

It allows a more realistic look at campaign success over time. A truly successful, multi-layered campaign is full of good ideas, mediocre ones, and unfortunately a few bad apples. As such, when evaluating marketing campaigns against relatively static normative benchmarks, advertisers may expect more consistency than is typically seen in the industry. Some marketing campaigns will fail to generate meaningful results, others will need firm optimizations, and a select few will blow the doors off on day one.

It offers a wider range of potential “benchmarks” to compare against. If the median benchmark value is +2 points, but some campaigns are able to achieve +5 or even +10 points of lift, there is more potential for stretching one’s department and organization towards even bigger goals. While achieving a campaign lift score above the median benchmark may be most appropriate when considering an entire portfolio of campaigns, there may be situations where higher bars should be cleared based on planning, spend, and other factors.

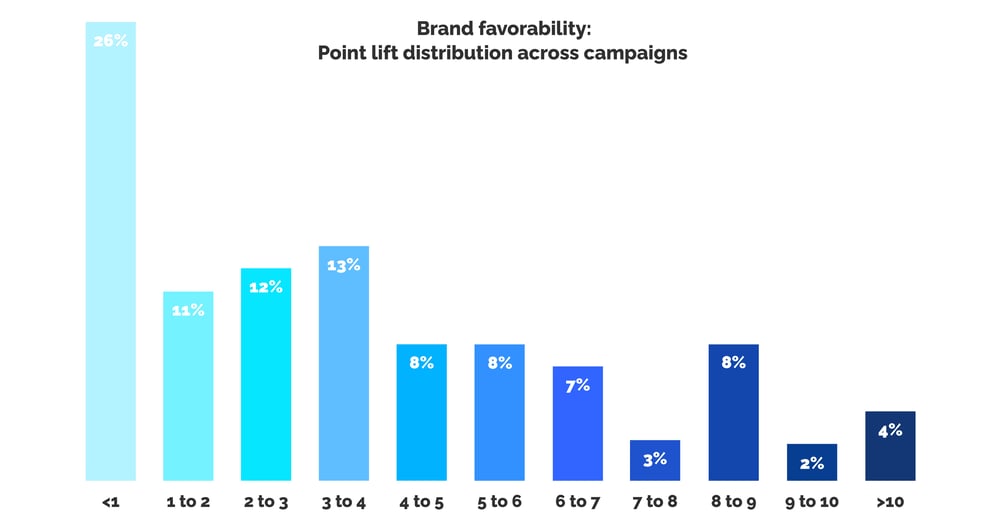

Below we show an example to illustrate the power of de-aggregation. Here, we have the lift scores for hundreds of campaigns tested in 2021-2022, all of which included ‘favorability’ in their brand lift assessment. Our normative benchmark for brand favorability lift across all campaigns is approximately 3.2 points: in other words, a typical campaign of moderate success will see favorability increase by ~3 points as a result of dedicated advertising.

DISQO’s Normative Benchmarks for Advertising Effectiveness, Favorability Lift Scores by Campaign.

However, a quick look at the histogram above shows that ~25% of campaigns fail to achieve even 1 point of lift on this metric. In addition, we see that ~15% of campaigns saw lift scores exceeding 8, 9, and even 10 points. In other words, the median value - here it’s 3.2 - hides some valuable nuance about what ‘typical’ campaigns often see on the lower and higher ends of the scale.
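For readers who want to run this kind of de-aggregated view on their own campaign data, here is a minimal sketch using Python's standard library. The twelve lift scores are made-up values, not DISQO's dataset; they are only shaped loosely like the histogram so the summary is easy to follow. The 1-point and 8-point thresholds mirror the ones discussed above.

```python
from statistics import median, quantiles

# Made-up favorability lift scores (in points), one per campaign.
lifts = [-0.5, 0.2, 0.8, 1.5, 2.4, 3.0, 3.4, 4.1, 5.0, 6.2, 8.5, 10.1]


def share(values, predicate):
    """Fraction of campaigns whose lift satisfies the predicate."""
    return sum(1 for v in values if predicate(v)) / len(values)


def summarize(values):
    """Summarize the distribution rather than relying on the median alone."""
    p25, _, p75 = quantiles(values, n=4)  # quartile cut points
    return {
        "median": median(values),
        "p25": p25,
        "p75": p75,
        "share_below_1": share(values, lambda v: v < 1.0),
        "share_above_8": share(values, lambda v: v > 8.0),
    }


summary = summarize(lifts)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

The quartiles give alternative reference points: the 75th percentile can serve as a stretch goal, while the share of campaigns below 1 point shows how common a weak result is.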

Beating the benchmark? Instead of treating any score above benchmark as a win, compare scores within your own brand campaigns to see whether a benchmark in that smaller set can push your team towards further success. Furthermore, because oft-repeated ad exposures may turn off otherwise interested customers, shooting for a multitude of moderately-positive lift activations may prove more valuable than a few high-lift versions.

Not meeting the benchmark? Due to company size, ad spend, or target markets, your brand may be constrained in ways that make typical lift scores an aspirational goal as your brand grows. In this circumstance, de-aggregation highlights that low lift scores are not uncommon, and that incremental improvements are entirely justified.

Checklist for analyzing normative benchmarks

Where are you engaging with benchmarks to drive organizational value?

- Looking at your brand in the mirror and building self-benchmarks

- Considering overall benchmarks to set higher-level goals

- Comparing to relevant category and industry benchmarks

- Prioritizing key metrics from many to a select few

- Using ad effectiveness benchmarks optimally for decisions

Missing something from the checklist? Not to worry! DISQO has quarterly ad effectiveness benchmarks that can replace your suboptimal legacy solutions. With full-funnel measurability, cross-channel visibility, and near-term recency, these benchmarks are designed to help marketers discover what ‘good’ looks like within a modern ad campaign, and to test themselves against that standard. On top of that, we publish regular reporting on our benchmark data, helping clients think through new and unique applications of our industry-leading product.

Instead of relying on antiquated solutions, come learn more about how DISQO’s ad effectiveness benchmarks are setting a new standard for campaign evaluation. Contact us for a walkthrough today, and download our most recent report to see what you might be missing.
